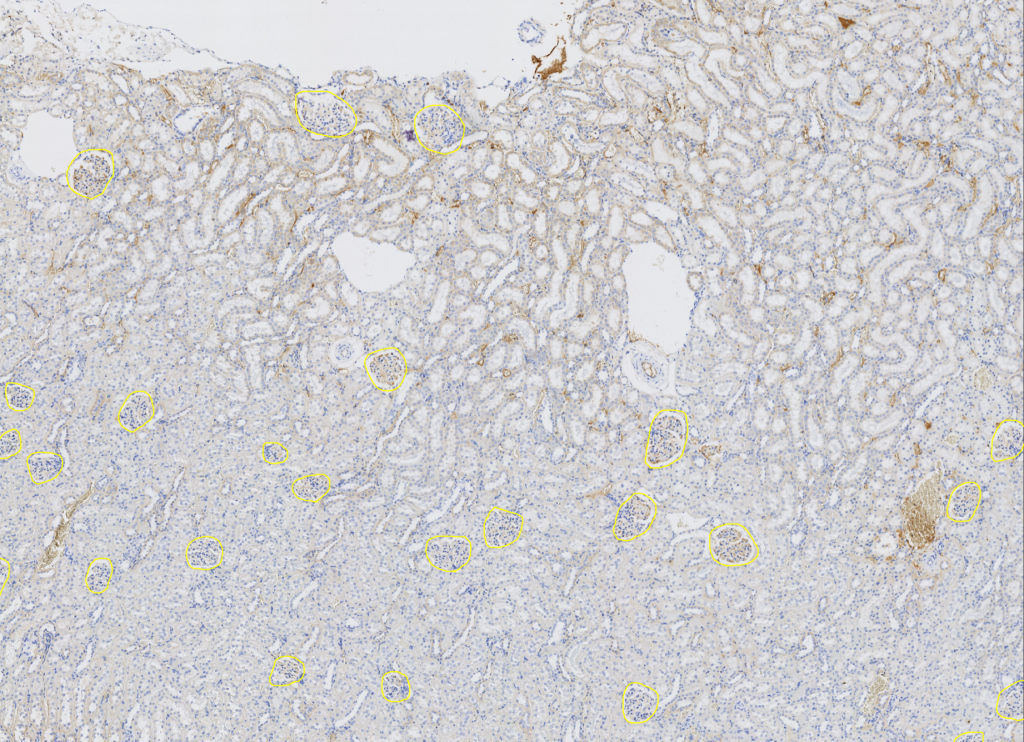

With deep learning object segmentation, you can segment arbitrary heterogeneous objects that standard segmentation methods cannot handle.

Good examples of this method are glomeruli and vessel detection.

Applying a pre-trained model

If you just want to reuse a pre-trained model (e.g. our glomeruli detection model), copy and paste the segmentation script into the script editor (under Tools), replace the model name, edit the image IDs you want to segment (or use a search query), and click on run.

It is recommended to draw an ROI annotation into the images (or to create an exclusion model) before running the script, to limit the area in which the algorithm searches for objects.

Remark: No Python or TensorFlow environment and no NVIDIA graphics card is needed for object detection using an existing model; these are only needed for training a new deep learning model (see "Training a new model" below).

Script explanation

int[] images = [5483161, 234234] // either orbitIDs or load via query

Session s = DLSegment.buildSession("models/20180202_glomeruli_detection_noquant.pb")

OrbitModel segModel = OrbitModel.LoadFromInputStream(this.getClass().getResourceAsStream("/resource/testmodels/dlsegmentsplit.omo"))

OrbitModel roiModel = null;

Map<Integer,List<Shape>> segmentationsPerImage = DLSegment.generateSegmentationAnnotations(images, s, segModel, roiModel, true)

- Define the images (ImageIDs) on which the object detection should be performed (or use a query to search for all images e.g. within one dataset).

- The session refers to the deep learning model file. You can use one from the model zoo, or train your own (see "Training a new model" below).

- The segModel defines how the mask is transformed into objects. You can use the embedded dlsegmentsplit.omo for most cases. If needed, you can modify it, e.g. to define the minimal object size or whether overlapping objects should be split into two objects.

- The roiModel can be used to define the ROI via an exclusion model; set it to null if not needed (e.g. if the ROI is defined by manual annotations). Only the exclusion model contained in roiModel is used; its main model is ignored here.

- The last line performs the actual deep learning object detection using TensorFlow in the background. If storeAnnotations (last parameter) is set to true, the object shapes will be stored as rare event annotations in the database. To load the annotations: Activate the Tools->Rare Object Detection tool, and select it in the tabs on the right side. Click on load to load the annotations.

Alternatively, set storeAnnotations to false to process the found object shapes in a different way. The result is a map of shapes (polygons) per imageID.

- If you have the rare object detection module active, you can define a 'dummy' model with different classes, e.g. sick and healthy. Then click on the first annotation and assign a class to it using the number keys: '1' for the first class, '2' for the second class, and so on. Click on statistics to see the statistics of the assignment. This can be very useful, e.g. to grade the disease state of the found objects. See the help of the rare object detection module for details.
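If storeAnnotations is set to false, the returned map of shapes per imageID can be post-processed directly. The following is a minimal sketch in plain Java (Groovy scripts can call the same classes) of one way to do this: it computes the area of each shape and drops small objects. The class ShapeSummary and its methods area and filterByMinArea are hypothetical helpers, not part of Orbit, and the map built in main is stand-in data. The sketch assumes each shape is a single simple outline and that areas are measured in the coordinate units of the shapes.

```java
import java.awt.Polygon;
import java.awt.Shape;
import java.awt.geom.PathIterator;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class for illustration; not part of the Orbit API.
class ShapeSummary {

    /** Area enclosed by a shape's outline (shoelace formula over the flattened path). */
    static double area(Shape shape) {
        // Flattening turns curved segments into straight lines (flatness 1.0).
        PathIterator it = shape.getPathIterator(null, 1.0);
        double[] c = new double[6];
        double sum = 0, startX = 0, startY = 0, prevX = 0, prevY = 0;
        while (!it.isDone()) {
            int type = it.currentSegment(c);
            if (type == PathIterator.SEG_MOVETO) {
                startX = prevX = c[0];
                startY = prevY = c[1];
            } else if (type == PathIterator.SEG_LINETO) {
                sum += prevX * c[1] - c[0] * prevY;
                prevX = c[0];
                prevY = c[1];
            } else if (type == PathIterator.SEG_CLOSE) {
                // Close the outline back to its starting point.
                sum += prevX * startY - startX * prevY;
                prevX = startX;
                prevY = startY;
            }
            it.next();
        }
        return Math.abs(sum) / 2.0;
    }

    /** Keeps, per image, only the shapes whose area is at least minArea. */
    static Map<Integer, List<Shape>> filterByMinArea(Map<Integer, List<Shape>> shapesPerImage, double minArea) {
        Map<Integer, List<Shape>> result = new LinkedHashMap<>();
        for (Map.Entry<Integer, List<Shape>> e : shapesPerImage.entrySet()) {
            List<Shape> kept = new ArrayList<>();
            for (Shape s : e.getValue()) {
                if (area(s) >= minArea) {
                    kept.add(s);
                }
            }
            result.put(e.getKey(), kept);
        }
        return result;
    }

    public static void main(String[] args) {
        // Stand-in for the map returned when storeAnnotations is false.
        Map<Integer, List<Shape>> segmentationsPerImage = new LinkedHashMap<>();
        List<Shape> shapes = new ArrayList<>();
        shapes.add(new Polygon(new int[]{0, 10, 10, 0}, new int[]{0, 0, 10, 10}, 4)); // 10 x 10 square
        shapes.add(new Polygon(new int[]{0, 2, 2, 0}, new int[]{0, 0, 2, 2}, 4));     // 2 x 2 square
        segmentationsPerImage.put(5483161, shapes);

        Map<Integer, List<Shape>> filtered = filterByMinArea(segmentationsPerImage, 50.0);
        for (Map.Entry<Integer, List<Shape>> e : filtered.entrySet()) {
            double total = 0;
            for (Shape s : e.getValue()) {
                total += area(s);
            }
            System.out.println("image " + e.getKey() + ": " + e.getValue().size()
                    + " objects, total area " + total);
        }
    }
}
```

In a real script, the stand-in map would be replaced by the segmentationsPerImage result from the script above; the size threshold of 50 is an arbitrary example value.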

Training a new model

First, a training set has to be created. For this, many objects have to be annotated manually. It is important to draw the exact outline of the objects using the annotation tools.

After annotating many objects from many images, you have to create mask images, which will be used as the training set for the deep learning framework. The masks can be created from the annotated images using this script.

To train a new TensorFlow model, use the training Python scripts. The readme.md there explains how to use them. Feel free to contact me if you need further help with this.
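To illustrate what a mask image is, here is a minimal sketch in plain Java that rasterizes annotated outlines into a binary image. It is not the mask creation script referred to above. The class MaskSketch and the method renderMask are hypothetical, and the mask convention used here (8-bit grayscale, white objects on a black background) is an assumption; the training scripts may expect a different format.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.Polygon;
import java.awt.Shape;
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class for illustration; not part of the Orbit API.
class MaskSketch {

    /** Draws all outlines as filled white regions on a black 8-bit grayscale image. */
    static BufferedImage renderMask(List<Shape> outlines, int width, int height) {
        BufferedImage mask = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = mask.createGraphics();
        g.setColor(Color.BLACK);
        g.fillRect(0, 0, width, height); // background
        g.setColor(Color.WHITE);
        for (Shape outline : outlines) {
            g.fill(outline);             // object pixels
        }
        g.dispose();
        return mask;
    }

    /** Returns true if the pixel at (x, y) belongs to an object. */
    static boolean isForeground(BufferedImage mask, int x, int y) {
        return mask.getRaster().getSample(x, y, 0) > 0;
    }

    public static void main(String[] args) {
        // Stand-in for a manually drawn annotation outline.
        List<Shape> outlines = new ArrayList<>();
        outlines.add(new Polygon(new int[]{2, 6, 6, 2}, new int[]{2, 2, 6, 6}, 4));

        BufferedImage mask = renderMask(outlines, 8, 8);
        System.out.println("inside object:  " + isForeground(mask, 3, 3));
        System.out.println("outside object: " + isForeground(mask, 0, 0));
    }
}
```

The sketch shows why exact outlines matter: every pixel inside the drawn outline becomes an object pixel in the training mask.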